
Understanding MCP (Model Context Protocol): A Bridge Between AI and the Real World

Introduction

Modern AI models like Claude, ChatGPT, and others are powerful, but they face a common challenge: they don’t have direct access to the real-world data you want them to process — such as project files, databases, APIs, or third-party services like GitHub or Slack.

That’s where MCP (Model Context Protocol) comes in. Think of it as a protocol that lets AI models talk to real-world systems in a secure, unified, and flexible way — just like a USB port lets you plug almost anything into your computer without needing a separate connector for each device.

This article explains MCP from the ground up, how it works, and how you can use it with real data sources. We’ll even include a practical code example.

What is MCP?

MCP stands for Model Context Protocol. It was created by Anthropic, the company behind Claude, which was founded by former OpenAI researchers. It’s an open protocol that enables large language models (LLMs) to retrieve context or take actions using data from:

Databases (SQL, NoSQL)

Web APIs (like GitHub, Jira, Slack)

File systems

Spreadsheets or documents

Instead of writing custom integration code for every new data source, MCP allows AI models to connect to these sources through a standard protocol.

Why Do We Need MCP?

Let’s say you’re building an AI assistant that answers questions about your project:

“What’s the status of feature XYZ in my repo?”

To answer that, the AI would need access to:

The project’s GitHub issues

The commit history

Maybe related Slack discussions

In the past, you’d have to:

Build a custom integration for each service.

Manage authentication, parsing, rate limits, etc.

Manually fetch and format the data.

With MCP, this work moves into reusable servers. The AI application speaks one standardized protocol, and each MCP server takes care of authentication, parsing, and rate limits for its own data source.
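To make the idea of a standardized request concrete: MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below builds such requests with the standard library only. The tool names (`list_issues`, `list_commits`, `search_messages`), the repository `acme/app`, and the arguments are hypothetical, chosen to mirror the GitHub and Slack scenario above rather than taken from any real server.

```python
import itertools
import json

_ids = itertools.count(1)  # each JSON-RPC request carries its own id


def tool_call(name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 `tools/call` request."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


# Hypothetical tools: three different services, one message shape.
requests = [
    tool_call("list_issues", {"repo": "acme/app", "label": "feature-xyz"}),
    tool_call("list_commits", {"repo": "acme/app", "path": "src/xyz"}),
    tool_call("search_messages", {"channel": "#dev", "query": "feature XYZ"}),
]

for raw in requests:
    print(raw)
```

Only the `name` and `arguments` fields change between services, which is what lets one client implementation talk to many servers.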

How MCP Works

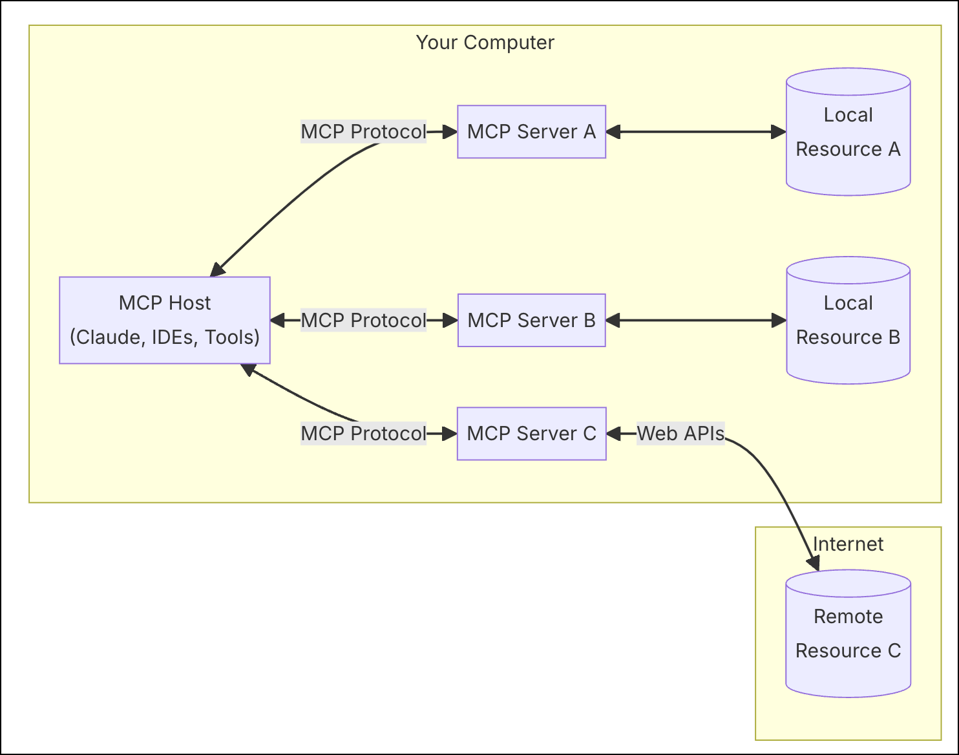

MCP is based on a client-server architecture.

MCP Host: The AI application the user works with (e.g., Claude Desktop or an IDE assistant). It runs the model and manages one or more MCP clients.

MCP Client: A connector inside the host that keeps a one-to-one connection with a single MCP server and relays requests and responses.

MCP Server: A service connected to a specific data source (e.g., a database, file system, or API).

Workflow:

The AI generates a request (e.g., “fetch open GitHub issues”).

MCP Host forwards this request to the appropriate MCP Server.

The MCP Server communicates with the actual data source.

The result is passed back to the AI.
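The four workflow steps can be imitated in a few lines of plain Python. This is only an in-process toy, not the real protocol: `ToyHost`, `ToyServer`, and the `ISSUES` list are hypothetical stand-ins, and an in-memory list plays the role of the GitHub API.

```python
from typing import Callable, Dict


class ToyServer:
    """Stands in for an MCP server wrapping one data source (hypothetical)."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., object]]):
        self.name = name
        self.tools = tools

    def call(self, tool: str, **arguments):
        # Step 3: the server talks to its actual data source.
        if tool not in self.tools:
            raise KeyError(f"{self.name} has no tool {tool!r}")
        return self.tools[tool](**arguments)


class ToyHost:
    """Stands in for the host that routes requests to servers (hypothetical)."""

    def __init__(self):
        self.servers: Dict[str, ToyServer] = {}

    def register(self, server: ToyServer) -> None:
        self.servers[server.name] = server

    def handle(self, server: str, tool: str, **arguments):
        # Step 2: forward the request to the matching server.
        # Step 4: hand the server's result back to the caller.
        return self.servers[server].call(tool, **arguments)


# In-memory data playing the role of the GitHub API.
ISSUES = [
    {"id": 1, "title": "Add login", "state": "open"},
    {"id": 2, "title": "Fix typo", "state": "closed"},
    {"id": 3, "title": "Feature XYZ", "state": "open"},
]


def open_issues():
    return [issue["title"] for issue in ISSUES if issue["state"] == "open"]


host = ToyHost()
host.register(ToyServer("github", {"open_issues": open_issues}))

# Step 1: the model asks for open GitHub issues.
result = host.handle("github", "open_issues")
print(result)  # ['Add login', 'Feature XYZ']
```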

Here’s a simple visual breakdown of this:

    Your computer
    ┌────────────┐
    │  MCP Host  │
    └─────┬──────┘
          │
          │ MCP Protocol
          ▼
    ┌────────────┐      ┌────────────┐
    │ MCP Server │ <--> │ Database 1 │
    └────────────┘      └────────────┘
    ┌────────────┐      ┌────────────┐
    │ MCP Server │ <--> │  Web API   │ (e.g., GitHub)
    └────────────┘      └────────────┘

Features of MCP

Unified Protocol: One way to access many types of data.

Security-Conscious Design: Credentials and access rules live in the MCP server, so the model never handles them directly.

Open Source: Extendable by developers.

Highly Flexible: Works with databases, files, APIs, and more.

Real-World Use Case with Code Example

Let’s imagine you have a PostgreSQL database of customer support tickets, and you want your AI model to answer:

“How many open tickets are pending right now?”

Instead of integrating PostgreSQL directly into your LLM, you run an MCP Server that connects to the database.

Step 1: Set Up the MCP Server

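You can write an MCP server in Python with the official SDK, published on PyPI as mcp (psycopg2-binary provides the PostgreSQL driver used below):

    pip install mcp psycopg2-binary

Then create a basic tool handler. The connection string is read from an environment variable (named SUPPORT_DB_DSN here as an example) instead of being hard-coded:

    # server.py
    import os

    import psycopg2
    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("ticket_query")

    @mcp.tool()
    def get_open_tickets() -> dict:
        """Return the number of support tickets whose status is 'open'."""
        # Keep credentials out of the source code.
        conn = psycopg2.connect(os.environ["SUPPORT_DB_DSN"])
        try:
            with conn.cursor() as cur:
                cur.execute("SELECT COUNT(*) FROM tickets WHERE status = 'open';")
                count = cur.fetchone()[0]
        finally:
            conn.close()  # always release the connection
        return {"open_tickets": count}

    if __name__ == "__main__":
        mcp.run()  # serves over stdio by default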

Step 2: Let the AI Ask via MCP Host

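The AI doesn’t need to know the SQL, credentials, or schema. When the model decides to use the tool, the host sends a standard JSON-RPC request to the server:

    {
      "jsonrpc": "2.0",
      "id": 1,
      "method": "tools/call",
      "params": {"name": "get_open_tickets", "arguments": {}}
    }

The server runs the query, and the payload that reaches the AI is:

    {
      "open_tickets": 42
    }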

Now the model can respond:

“There are currently 42 open support tickets.”
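Before the payload reaches the model, a host application might validate it and turn it into a line of context. The sketch below shows one way to do that with the standard library; the function name `summarize_ticket_payload` and the wording of the context line are illustrative assumptions, not part of MCP.

```python
import json


def summarize_ticket_payload(raw: str) -> str:
    """Validate the tool payload and turn it into one line of model context."""
    payload = json.loads(raw)
    count = payload.get("open_tickets")
    # Accept only a non-negative integer. bool is a subclass of int in
    # Python, so it is excluded explicitly.
    if isinstance(count, bool) or not isinstance(count, int) or count < 0:
        raise ValueError(f"unexpected payload: {payload!r}")
    return f"Tool result: {count} support tickets currently have status 'open'."


context_line = summarize_ticket_payload('{"open_tickets": 42}')
print(context_line)
```

Rejecting malformed payloads at this point keeps a broken or tampered result from being presented to the model as fact.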

Potential Applications

Project status updates: AI reads your Git repo and summarizes ongoing work.

Business insights: Pulls sales data from a database to generate reports.

Slack/Discord integration: Automatically sends alerts based on logic or LLM outputs.

Developer tools: Code review assistants that pull in context via MCP.

Security Considerations

Like any integration protocol, MCP has risks:

Prompt Injection: Malicious data can trick the AI into doing something unintended.

Over-permissioning: Ensure the AI has only the access it truly needs.

Authentication: Use secure tokens or role-based access control.
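The over-permissioning point can be illustrated with a deny-by-default allowlist checked before any tool runs. This is a minimal sketch under stated assumptions: `ToolPolicy`, the role names, and the `close_ticket` tool are hypothetical, and a real deployment would tie roles to authenticated identities.

```python
from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    """Maps each role to the set of tools it may invoke (hypothetical)."""

    allowed: dict[str, set[str]] = field(default_factory=dict)

    def authorize(self, role: str, tool: str) -> None:
        # Deny by default: unknown roles and unlisted tools are rejected.
        if tool not in self.allowed.get(role, set()):
            raise PermissionError(f"role {role!r} may not call {tool!r}")


policy = ToolPolicy({
    "support_bot": {"get_open_tickets"},                 # read-only
    "admin_bot": {"get_open_tickets", "close_ticket"},   # may also modify
})


def guarded_call(role: str, tool: str) -> str:
    """Run the authorization check before (pretending to) execute a tool."""
    policy.authorize(role, tool)
    return f"{tool} executed for {role}"


print(guarded_call("support_bot", "get_open_tickets"))
try:
    guarded_call("support_bot", "close_ticket")
except PermissionError as err:
    print("blocked:", err)
```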

Challenges Ahead

Still new: Adoption is early. It may take time to become widely used.

Learning curve: Setting up your first MCP server can be tricky.

Performance: Real-time access to large datasets may require optimization.
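One common optimization for the performance concern is to cache slow query results for a short time. The sketch below is a generic time-to-live cache in plain Python, not a feature of MCP itself; `TTLCache` and `count_open_tickets` are hypothetical names, and the 30-second lifetime is an arbitrary example value.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Caches values for `ttl` seconds; `clock` is injectable for testing."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # still fresh: skip the data source
        value = compute()  # missing or stale: query again
        self._store[key] = (now, value)
        return value


calls = {"n": 0}  # counts how often the "database" is actually hit


def count_open_tickets() -> int:
    """Stand-in for an expensive database query."""
    calls["n"] += 1
    return 42


cache = TTLCache(ttl=30.0)
first = cache.get_or_compute("open_tickets", count_open_tickets)
second = cache.get_or_compute("open_tickets", count_open_tickets)
print(first, second, calls["n"])  # 42 42 1
```

The trade-off is staleness: a cached count can lag the database by up to the chosen lifetime, so the value should match how fresh the answers need to be.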

Conclusion

MCP gives large language models a standard way to connect to real-world systems. It moves the hard parts of integration into reusable servers and gives developers a unified, security-conscious way to bring real context to AI models.

Whether you’re building an AI assistant, automating business workflows, or analyzing complex data — MCP is the missing bridge between your AI and your data.

You can learn more at the official site: https://modelcontextprotocol.io